Source: Wikipedia

Introduction:

Think outside the box!

Divergence theorem

The divergence theorem states that the outward flux of a vector field through a closed surface is equal to the volume integral of the divergence over the region inside the surface. Intuitively, it states that the sum of all sources (with sinks regarded as negative sources) gives the net flux out of a region.

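Suppose $V$ is a subset of $\mathbb{R}^n$ (in the case of $n = 3$, $V$ represents a volume in 3D space) which is compact and has a piecewise smooth boundary $S$ (also indicated with $\partial V = S$). If $\mathbf{F}$ is a continuously differentiable vector field defined on a neighborhood of $V$, then we have:

$$\iiint_V (\nabla\cdot\mathbf{F})\,dV = \oint_S (\mathbf{F}\cdot\hat{\mathbf{n}})\,dS,$$

where $\hat{\mathbf{n}}$ is the outward-pointing unit normal on $S$.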

- Line integral

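- Green's theorem (2D form of the divergence theorem): $\oint_C (\mathbf{F}\cdot\hat{\mathbf{n}})\,ds = \iint_D (\nabla\cdot\mathbf{F})\,dA$, where $D$ is a region bounded by the closed curve $C$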

- Vector calculus
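A quick numerical sanity check of the divergence theorem, assuming the example field $\mathbf{F} = (x^2, y^2, z^2)$ on the unit cube $[0,1]^3$ (both chosen here only for illustration; the function names are hypothetical). A midpoint-rule volume integral of $\nabla\cdot\mathbf{F} = 2x + 2y + 2z$ and the summed outward flux through the six faces should both come out to 3.

```python
import numpy as np

def F(x, y, z):
    """Example vector field F = (x^2, y^2, z^2), stacked with shape (3, ...)."""
    return np.stack([x**2, y**2, z**2])

def div_F(x, y, z):
    """Analytic divergence of F: 2x + 2y + 2z."""
    return 2 * x + 2 * y + 2 * z

def volume_integral(n=50):
    """Midpoint-rule approximation of the triple integral of div F over [0, 1]^3."""
    h = 1.0 / n
    c = (np.arange(n) + 0.5) * h                      # cell midpoints
    X, Y, Z = np.meshgrid(c, c, c, indexing="ij")
    return div_F(X, Y, Z).sum() * h**3

def surface_flux(n=50):
    """Midpoint-rule approximation of the outward flux of F through the six cube faces."""
    h = 1.0 / n
    c = (np.arange(n) + 0.5) * h
    U, W = np.meshgrid(c, c, indexing="ij")
    flux = 0.0
    for axis in range(3):                                  # faces normal to x, y, z
        for value, sign in ((1.0, 1.0), (0.0, -1.0)):      # far face: +n, near face: -n
            coords = [U, W]
            coords.insert(axis, np.full_like(U, value))    # fix the normal coordinate
            flux += sign * F(*coords)[axis].sum() * h**2   # integrate F . n over the face
    return flux

print(volume_integral(), surface_flux())  # both approximately 3.0
```

Because the divergence here is linear, the midpoint rule integrates it exactly, so any mismatch would point to a bug rather than discretization error.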

Model-free Estimation

Methods for estimating (the value of) a policy when you do not have access to the environment's MDP (e.g. the transition probabilities, the reward function, or the full state/action space).

- Temporal Difference Learning (incomplete episode)

- Monte-Carlo Learning (complete episode)
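A minimal sketch contrasting the two update rules, assuming the classic 5-state random-walk task (states 0 to 4, start in the middle, reward 1 only when exiting on the right, so the true values are 1/6, 2/6, ..., 5/6) and a tabular value function for a fixed 50/50 policy. All names are hypothetical. Monte-Carlo has to wait for the complete episode to compute the return $G_t$, while TD(0) updates from each single transition using the bootstrapped target $r + \gamma V(s')$.

```python
import random

N_STATES = 5      # non-terminal states 0..4; stepping below 0 or past 4 ends the episode
START = 2
TRUE_V = [(i + 1) / 6 for i in range(N_STATES)]   # known values for this toy task

def run_episode(rng):
    """Random walk under a fixed 50/50 policy; reward 1 only when exiting on the right."""
    s, episode = START, []
    while True:
        s_next = s + rng.choice((-1, 1))
        if s_next >= N_STATES:
            episode.append((s, 1.0, None))
            return episode
        if s_next < 0:
            episode.append((s, 0.0, None))
            return episode
        episode.append((s, 0.0, s_next))
        s = s_next

def mc_update(V, episode, alpha, gamma=1.0):
    """Every-visit Monte-Carlo: move V(s) toward the actual return G_t (needs the full episode)."""
    G = 0.0
    for s, r, _ in reversed(episode):
        G = r + gamma * G
        V[s] += alpha * (G - V[s])

def td0_update(V, s, r, s_next, alpha, gamma=1.0):
    """TD(0): move V(s) toward the bootstrapped target r + gamma * V(s'), one step at a time."""
    target = r + (gamma * V[s_next] if s_next is not None else 0.0)
    V[s] += alpha * (target - V[s])

def estimate(method, episodes=5000, alpha=0.01, seed=0):
    rng = random.Random(seed)
    V = [0.5] * N_STATES
    for _ in range(episodes):
        episode = run_episode(rng)
        if method == "mc":
            mc_update(V, episode, alpha)
        else:  # "td": transitions processed in order, as they would arrive online
            for s, r, s_next in episode:
                td0_update(V, s, r, s_next, alpha)
    return V

for method in ("mc", "td"):
    print(method, [round(v, 2) for v in estimate(method)])
```

Neither method ever looks at the transition probabilities or the reward function directly; both learn only from sampled experience.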

Generative topographic map (GTM)

Generative topographic map (GTM) is a machine learning method that is a probabilistic counterpart of the self-organizing map (SOM), is provably convergent, and does not require a shrinking neighborhood or a decreasing step size. It is a generative model: the data is assumed to arise by first probabilistically picking a point in a low-dimensional space, mapping the point to the observed high-dimensional input space (via a smooth function), then adding noise in that space.

Paper link: GTM: The Generative Topographic Mapping

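When using a mapping $\mathbf{y}(\mathbf{x};\mathbf{W})$ (linear in the weights $\mathbf{W}$) from the latent (code) space to the $D$-dimensional data space, the decoded distribution should be

$$p(\mathbf{t}\mid\mathbf{W},\beta) = \int p(\mathbf{t}\mid\mathbf{x},\mathbf{W},\beta)\,p(\mathbf{x})\,d\mathbf{x},$$

where $p(\mathbf{t}\mid\mathbf{x},\mathbf{W},\beta) = \left(\frac{\beta}{2\pi}\right)^{D/2}\exp\left\{-\frac{\beta}{2}\lVert\mathbf{y}(\mathbf{x};\mathbf{W})-\mathbf{t}\rVert^{2}\right\}$. It can be optimized by maximizing the log likelihood $\mathcal{L}(\mathbf{W},\beta) = \sum_{n=1}^{N}\ln p(\mathbf{t}_n\mid\mathbf{W},\beta)$. If $p(\mathbf{t}\mid\mathbf{x},\mathbf{W},\beta)$ is a Gaussian distribution as above and $p(\mathbf{x})$ is a sum of delta functions on a regular grid, $p(\mathbf{x}) = \frac{1}{K}\sum_{i=1}^{K}\delta(\mathbf{x}-\mathbf{x}_i)$, then $p(\mathbf{t}\mid\mathbf{W},\beta) = \frac{1}{K}\sum_{i=1}^{K}p(\mathbf{t}\mid\mathbf{x}_i,\mathbf{W},\beta)$ reduces to a constrained Gaussian mixture model. To design a SOM-like non-linear mapping, they let $\mathbf{y}(\mathbf{x};\mathbf{W}) = \mathbf{W}\boldsymbol{\phi}(\mathbf{x})$, where $\boldsymbol{\phi}(\mathbf{x})$ is a vector of $M$ fixed basis functions (e.g. Gaussians). EM is used to optimize this model.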

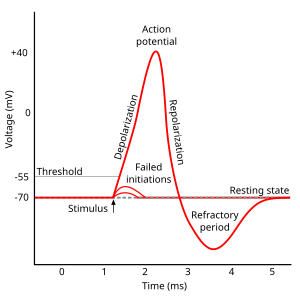
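A minimal numpy sketch of fitting a GTM with EM, assuming a 1-D latent grid of $K$ points, $M$ Gaussian basis functions plus a bias term, no prior on the weights, and a simple initialization along the first principal component of the data (loosely following the PCA-based initialization described in the paper). Function names and the toy data are hypothetical, and the weights are stored transposed, so in code $\mathbf{y}(\mathbf{x}_i)$ is `Phi[i] @ W`. Because each M-step is solved exactly, the recorded log likelihood should never decrease.

```python
import numpy as np

def rbf_basis(latent, centres, width):
    """Fixed Gaussian basis functions phi(x_i) plus a bias column: shape (K, M + 1)."""
    d2 = (latent[:, None] - centres[None, :]) ** 2
    return np.hstack([np.exp(-d2 / (2 * width**2)), np.ones((len(latent), 1))])

def sq_dists(Y, T):
    """Squared distances ||y_i - t_n||^2 between grid images and data points: shape (K, N)."""
    return ((Y[:, None, :] - T[None, :, :]) ** 2).sum(axis=2)

def e_step(T, Y, beta):
    """Return sum_n ln p(t_n | W, beta) and responsibilities R (K, N) whose columns sum to 1."""
    K, D = Y.shape
    log_comp = (0.5 * D * np.log(beta / (2 * np.pi))
                - 0.5 * beta * sq_dists(Y, T) - np.log(K))  # uniform prior 1/K on the grid
    m = log_comp.max(axis=0)
    log_pt = m + np.log(np.exp(log_comp - m).sum(axis=0))  # log-sum-exp over grid points
    return log_pt.sum(), np.exp(log_comp - log_pt)

def fit_gtm(T, K=20, M=5, iters=30):
    """EM for a GTM with a 1-D latent grid; returns W, beta and the log-likelihood history."""
    N, D = T.shape
    latent = np.linspace(-1.0, 1.0, K)                            # grid points x_i
    Phi = rbf_basis(latent, np.linspace(-1.0, 1.0, M), 2.0 / M)   # (K, M + 1)
    # Initialize the grid images along the first principal component of the data.
    mean = T.mean(axis=0)
    _, svals, Vt = np.linalg.svd(T - mean, full_matrices=False)
    init = mean + np.outer(latent, Vt[0] * svals[0] / np.sqrt(N))
    W = np.linalg.lstsq(Phi, init, rcond=None)[0]                 # (M + 1, D)
    beta = 1.0
    history = []
    for _ in range(iters):
        ll, R = e_step(T, Phi @ W, beta)                          # E-step
        history.append(ll)
        G = np.diag(R.sum(axis=1))
        W = np.linalg.solve(Phi.T @ G @ Phi, Phi.T @ R @ T)       # M-step: weighted least squares
        beta = N * D / (R * sq_dists(Phi @ W, T)).sum()           # M-step: inverse noise variance
    return W, beta, history

# Toy data: noisy points along a 1-D curve embedded in 2-D.
rng = np.random.default_rng(1)
s = rng.uniform(-1.0, 1.0, 200)
data = np.column_stack([s, np.sin(np.pi * s)]) + rng.normal(scale=0.05, size=(200, 2))
W, beta, history = fit_gtm(data)
```

The responsibilities `R` play the role of the SOM's neighborhood-weighted assignments, but they come out of the probabilistic model instead of a hand-tuned shrinking schedule.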

Refractory Period

(Figure: Recording of an action potential, showing the various phases that occur as the wave passes a point on a cell membrane.)

Poisson spiking assumes that each spike is independent of the others. However, neurons have a refractory period that prevents a cell from spiking again immediately after a previous spike. This period is about 10 ms.

In physiology, a refractory period is a period of time during which an organ or cell is incapable of repeating a particular action, or (more precisely) the amount of time it takes for an excitable membrane to be ready for a second stimulus once it returns to its resting state following an excitation.
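A minimal sketch of how a refractory period breaks the Poisson independence assumption, assuming a simple "dead time" model in which each inter-spike interval is the refractory period plus an exponential waiting time. The function names and parameter values are hypothetical; with `refractory_s=0.0` the generator is an ordinary homogeneous Poisson spike train.

```python
import random

def spike_train(rate_hz, duration_s, refractory_s=0.0, seed=0):
    """Spike times (s) of a Poisson process with an absolute refractory (dead) time.

    rate_hz is the firing rate while the cell is *not* refractory, so the mean
    rate of the whole train is 1 / (1 / rate_hz + refractory_s)."""
    rng = random.Random(seed)
    spikes, t = [], 0.0
    while True:
        t += rng.expovariate(rate_hz)   # exponential waiting time -> Poisson spiking
        if t >= duration_s:
            return spikes
        spikes.append(t)
        t += refractory_s               # no spike is allowed during the refractory period

def inter_spike_intervals(spikes):
    return [b - a for a, b in zip(spikes, spikes[1:])]

poisson = inter_spike_intervals(spike_train(50.0, 100.0))
refractory = inter_spike_intervals(spike_train(50.0, 100.0, refractory_s=0.010))
print(min(poisson), min(refractory))
```

In the pure Poisson train, intervals shorter than 10 ms are common, whereas with the dead time no interval can be shorter than the refractory period, so successive spikes are no longer independent.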

Hippocampal replay

Hippocampal replay is a phenomenon observed in rats, mice,[1] cats, rabbits,[2] songbirds[3] and monkeys.[4] During sleep or awake rest, replay refers to the re-occurrence of a sequence of cell activations that also occurred during activity, but the replay has a much faster time scale. It may be in the same order, or in reverse. Cases were also found where a sequence of activations occurs before the actual activity, but it is still the same sequence. This is called preplay.

The phenomenon has mostly been observed in the hippocampus, a brain region associated with memory and spatial navigation. Specifically, the cells that exhibit this behavior are place cells, characterized by reliably increasing their activity when the animal is in a certain location in space. During navigation, the place cells fire in a sequence according to the path of the animal. In a replay instance, the cells are activated as if in response to the same spatial path, but much faster than the animal actually moved.
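One approach used in the replay literature to tell forward from reverse replay is rank-order correlation, sketched here under simplifying assumptions: order the place cells by where their place fields sit on the track, then rank-correlate that order with each cell's first spike time inside a candidate replay event. A Spearman correlation near +1 means forward replay and near -1 means reverse replay. Because only the order matters, the much faster time scale of replay does not affect the score. The 0.8 threshold, the function names and the example numbers are hypothetical, and ties are assumed absent.

```python
def ranks(values):
    """Rank of each value (0 = smallest); assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, i in enumerate(order):
        r[i] = rank
    return r

def spearman(a, b):
    """Spearman rank correlation 1 - 6 * sum(d^2) / (n (n^2 - 1)), valid without ties."""
    n = len(a)
    d2 = sum((x - y) ** 2 for x, y in zip(ranks(a), ranks(b)))
    return 1 - 6 * d2 / (n * (n**2 - 1))

def classify_replay(field_positions, first_spike_times, threshold=0.8):
    """Label a candidate event as forward or reverse replay from the order the cells fire in."""
    rho = spearman(field_positions, first_spike_times)
    if rho >= threshold:
        return "forward", rho
    if rho <= -threshold:
        return "reverse", rho
    return "none", rho

fields = [0.1, 0.3, 0.5, 0.7, 0.9]                 # place-field centres along the track (m)
print(classify_replay(fields, [2, 5, 9, 12, 16]))  # ('forward', 1.0)
print(classify_replay(fields, [16, 12, 9, 5, 2]))  # ('reverse', -1.0)
```

Real analyses also shuffle cell identities to test whether a correlation is stronger than chance; that step is omitted here.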

Backward replay during awake and forward replay during sleep

Previous research in Foster's lab revealed that rats actually "envision" their routes in advance through sequential firing of hippocampal neurons. And the researchers knew that sometimes, during pauses, rats replayed those sequences in reverse—but no one knew why. (https://hub.jhu.edu/2016/08/25/rat-study-brain-replay/)

Hebbian Learning

Hebbian learning is one of the oldest learning algorithms and is based in large part on the dynamics of biological systems. A synapse between two neurons is strengthened when the neurons on either side of the synapse (input and output) have highly correlated outputs. In essence, when an input neuron fires, if it frequently leads to the firing of the output neuron, the synapse is strengthened. Following the analogy to an artificial system, the tap weight is increased with high correlation between two sequential neurons.
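A minimal sketch of the plain Hebb rule $\Delta w_i = \eta\, x_i\, y$ for a single output unit, assuming the output activity $y$ is given (clamped) rather than computed from the weights. The function name and the toy data are hypothetical. An input that is highly correlated with the output ends up with a large weight, while an uncorrelated input stays near zero.

```python
import numpy as np

def hebbian_train(X, y, lr=0.01):
    """Plain Hebb rule: w_i += lr * x_i * y for every sample (no weight decay or normalization)."""
    w = np.zeros(X.shape[1])
    for x_t, y_t in zip(X, y):
        w += lr * x_t * y_t     # strengthen weights whose input is co-active with the output
    return w

rng = np.random.default_rng(0)
n = 1000
y = rng.choice([-1.0, 1.0], size=n)                 # output neuron activity
correlated = y + rng.normal(scale=0.1, size=n)      # input that tracks the output
uncorrelated = rng.choice([-1.0, 1.0], size=n)      # input independent of the output
X = np.column_stack([correlated, uncorrelated])
w = hebbian_train(X, y)
print(w)  # first weight near lr * n = 10, second near 0
```

The plain rule puts no bound on weight growth; variants such as Oja's rule add a normalizing term for that reason.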

I have known about Hebbian learning for a while, as well as other interesting techniques like Optimal Brain Surgeon. My first impression of Hinton’s paper on capsules was that he simply reused Hebbian learning to train a neural network: the correlations between capsules resemble the ones in Hebbian learning.

Priming

Priming is an implicit memory effect in which exposure to one stimulus (i.e., perceptual pattern) influences the response to another stimulus, without conscious guidance or intention.

alpha motor neurons

Afferent Inputs:

- upper motor neurons (voluntary control)

- sensory neurons (e.g. Patellar Reflex)

- interneurons, including Renshaw cells:

  - They receive an excitatory collateral from the alpha neuron's axon as they emerge from the motor root, and are thus "kept informed" of how vigorously that neuron is firing.

  - They send an inhibitory axon to synapse with the cell body of the initial alpha neuron and/or an alpha motor neuron of the same motor pool.

Motor-control simulation frameworks like MuJoCo just model a joint as a hinge or a slide, which lacks antagonistic (agonist/antagonist muscle pair) actuation. DeepMind’s latest research shows that activating the sensors on the fingers can help agents learn complex tasks in simulation, which is more reasonable than training an agent that has no self-awareness from scratch.

Central pattern generator

Central pattern generators are biological neural networks that produce rhythmic outputs in the absence of rhythmic input. They are the source of the tightly-coupled patterns of neural activity that drive rhythmic motions like walking, breathing, or chewing. Importantly, the ability to function without input from higher brain areas does not mean that CPGs don’t receive modulatory inputs, or that their outputs are fixed. Flexibility in response to sensory input is a fundamental quality of CPG driven behavior.
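Central pattern generators are often modeled as a pair of mutually inhibiting "half-center" neurons. The sketch below swaps in one standard model of this kind, Matsuoka's two-neuron oscillator with adaptation, to show rhythmic output emerging from a constant, non-rhythmic drive $s$. The parameter values are illustrative assumptions, picked to satisfy $1 + \tau/T < a < 1 + b$: under that condition the symmetric resting state is unstable and no state with one neuron silent is an equilibrium, so the two neurons keep alternating. The function name is hypothetical.

```python
def matsuoka_cpg(duration=30.0, dt=0.001, s=1.0, a=2.5, b=2.5, tau=0.25, T=0.5):
    """Two mutually inhibiting neurons with adaptation (Matsuoka oscillator), forward Euler.

    s is a constant tonic drive (no rhythmic input); a is the mutual-inhibition weight,
    b the adaptation strength, tau and T the fast and slow time constants.
    Returns samples of x1 - x2, which alternates in sign while the pair oscillates."""
    x = [0.1, 0.0]          # small asymmetry breaks the symmetric resting state
    v = [0.0, 0.0]          # adaptation (fatigue) variables
    out = []
    for _ in range(int(duration / dt)):
        y = [max(0.0, xi) for xi in x]                                   # firing rates
        dx = [(-x[i] - b * v[i] - a * y[1 - i] + s) / tau for i in range(2)]
        dv = [(-v[i] + y[i]) / T for i in range(2)]
        x = [x[i] + dt * dx[i] for i in range(2)]
        v = [v[i] + dt * dv[i] for i in range(2)]
        out.append(x[0] - x[1])
    return out

signal = matsuoka_cpg()
second_half = signal[len(signal) // 2:]           # skip the initial transient
sign_changes = sum(1 for p, q in zip(second_half, second_half[1:]) if p * q < 0)
print(sign_changes)   # repeated alternations despite the constant input
```

The adaptation variable `v` is what lets the active neuron tire and release its partner from inhibition; modulatory input could be modeled by varying `s` over time, which changes the rhythm without being rhythmic itself.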